The ship of ThESIus

Greetings, developers.

Replacing RabbitMQ with… RabbitMQ?

As we briefly touched upon in the EVE Evolved dev blog, one of our goals is to get ESI better connected to the Quasar ecosystem. Currently, ESI connects to the monolith via two distinct channels: sending Quasar protobuf messages, and sending ‘old’ JSON requests. Both of these go over RabbitMQ. If we wanted to upgrade this to a full-on Quasar connection, the protobuf messages would be almost plug-and-play, while the JSON requests would all need to be migrated to protobuf messages. This migration is one we would rather avoid altogether; it can easily take several months to complete, without bringing new functionality to the table.

Luckily, we don’t have to do this right away. Our friends at Team Tech Co have been working on an adapter service for Quasar to simplify the connectivity flow to the monolith. The adapter takes in messages from RabbitMQ and wraps the JSON messages into a Quasar protobuf message. This is then sent to the Quasar gateways. Existing services would not notice anything, as they would still be talking over RabbitMQ. But processing on the monolith side suddenly becomes easier to deal with. Additionally, because of this JSON wrapping, new endpoints can skip RabbitMQ altogether and talk directly to the Quasar gateways.
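To make the wrapping idea a bit more concrete, here is a minimal sketch in Go. Everything named here is an assumption for illustration: the `Envelope` type, its fields, the `wrapJSON` function and the routing key are hypothetical stand-ins, since the real adapter emits a Quasar protobuf message whose schema is not described in this post.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// Envelope is a hypothetical stand-in for the Quasar protobuf message that
// carries a legacy JSON request. The real message definition is not shown
// in this post.
type Envelope struct {
	ContentType string // tells the receiver how to decode Payload
	RoutingKey  string // the RabbitMQ routing key the request arrived on
	Payload     []byte // the original JSON request, byte for byte
}

// wrapJSON checks that the body is valid JSON and wraps it unchanged, so the
// sending service does not need to know that anything happened.
func wrapJSON(routingKey string, body []byte) (Envelope, error) {
	if !json.Valid(body) {
		return Envelope{}, errors.New("body is not valid JSON")
	}
	return Envelope{
		ContentType: "application/json",
		RoutingKey:  routingKey,
		Payload:     body,
	}, nil
}

func main() {
	// "esi.status" is a made-up routing key.
	env, err := wrapJSON("esi.status", []byte(`{"request":"status"}`))
	if err != nil {
		fmt.Println("wrap failed:", err)
		return
	}
	fmt.Printf("%s via %s: %s\n", env.ContentType, env.RoutingKey, env.Payload)
}
```

The point of the sketch is that the payload is passed through untouched; only the transport around it changes.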

We will be testing this new adapter on and off in the coming week(s). Last Monday was the first test, where we found out that several (even older) JSON requests were timing out. A fix is in the works, and once it is done, you can expect another switch. A nice detail is that switching the adapter on/off is done at runtime. This means there is no downtime involved while switching. As a result, we feel more confident switching on/off, because when things go wrong we can simply switch back.
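A runtime switch like this can be as simple as a flag that is read for every message. The sketch below shows the general idea in Go; the `Switch` type and the path names are hypothetical, and the post does not say how the real adapter implements its toggle.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// Switch picks between two message paths at runtime. The names are
// hypothetical; the post only says the adapter can be toggled without downtime.
type Switch struct {
	useAdapter atomic.Bool
}

// Set flips the switch; it is safe to call while messages are flowing.
func (s *Switch) Set(on bool) { s.useAdapter.Store(on) }

// Route returns the path a message should take right now.
func (s *Switch) Route() string {
	if s.useAdapter.Load() {
		return "adapter"
	}
	return "legacy"
}

func main() {
	var s Switch
	fmt.Println(s.Route()) // legacy
	s.Set(true)
	fmt.Println(s.Route()) // adapter
	s.Set(false)           // something went wrong: switch back
	fmt.Println(s.Route()) // legacy
}
```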

A gateway to ESI

Another part of the puzzle is to modernize and unify most of the ESI infrastructure. In practice, this means using a Quasar-enabled template as the base for new ESI services and endpoints.

The first service we picked up is what we call the API Gateway. This will be the first service that picks up your HTTP request. It checks whether it is a legit request, whether you are banned, whether you are authorized to request the resource, and so on. If all of that succeeds, it sends your request through to the endpoint fetching your data. This keeps the endpoint small and simple, while we can be sure all traffic we let through is legit.
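The "check first, forward only if everything passes" flow can be sketched as a chain of small checks. This is an illustration under assumptions, not the actual API Gateway code: the `Request`, `Check` and `Gateway` types, the ban list, the scope name and the 403 status codes are all made up for the example.

```go
package main

import "fmt"

// Request is a simplified, hypothetical view of an incoming HTTP request.
type Request struct {
	Path        string
	CharacterID int
	Scopes      []string // scopes granted by the caller's token
}

// Check inspects a request and returns an HTTP status code; 0 means "pass".
type Check func(Request) int

// Gateway runs every check in order and forwards only if all of them pass.
type Gateway struct {
	Checks  []Check
	Forward func(Request) int // the endpoint that actually fetches the data
}

// Handle returns the status of the first failing check, or the endpoint's
// own status when nothing objects.
func (g Gateway) Handle(r Request) int {
	for _, check := range g.Checks {
		if status := check(r); status != 0 {
			return status // rejected before reaching the endpoint
		}
	}
	return g.Forward(r)
}

// notBanned rejects callers on a (hypothetical) ban list.
func notBanned(banned map[int]bool) Check {
	return func(r Request) int {
		if banned[r.CharacterID] {
			return 403
		}
		return 0
	}
}

// hasScope rejects callers whose token lacks the required scope.
func hasScope(required string) Check {
	return func(r Request) int {
		for _, s := range r.Scopes {
			if s == required {
				return 0
			}
		}
		return 403
	}
}

func main() {
	gw := Gateway{
		Checks:  []Check{notBanned(map[int]bool{42: true}), hasScope("example.scope")},
		Forward: func(Request) int { return 200 },
	}
	ok := Request{Path: "/example/", CharacterID: 1, Scopes: []string{"example.scope"}}
	banned := Request{Path: "/example/", CharacterID: 42, Scopes: []string{"example.scope"}}
	fmt.Println(gw.Handle(ok), gw.Handle(banned))
}
```

Because every check lives outside the endpoint, the `Forward` function can stay small and assume the request is already legit.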

Currently there is already such an “API Gateway”, called the ESI router. But for several reasons we decided to not convert that router into our API Gateway:

The current router consists of a Python and a Go part. While we don’t mind either language, mixing languages adds complexity. This is more error-prone, and more difficult to maintain.

At the same time, it has no integration with Quasar itself. We had a choice: build this out manually, or switch to a Quasar-enabled template and build on top of that.

The current router does a lot of different things, which makes changes tricky: one thing can easily impact another. We are fans of the Unix philosophy, so instead we are splitting the responsibilities of the ESI router over several services.

Speaking of the ESI router doing a lot of things: some of these parts could really use sunsetting (like SSOv1 tokens) or should be implemented in a way that’s more consistent with the rest of the EVE/Quasar ecosystem (like A/B testing).

ESI is currently based on the Swagger 2.0 specs, but we really want to upgrade to OpenAPI 3.1. Neither part of the current ESI router supports that. This would have to be migrated somehow. More on that a bit later in this post.

This gateway will start out as a “simple” reverse proxy, routing all incoming requests into the existing ESI infrastructure. These are then picked up by the ESI router and distributed to the appropriate endpoints. Over time we will be moving all the functionality from the ESI router to new services, eventually removing it completely. And soon, once enough functionality has been moved over, we will start with the fun: gradually rolling out new functionality.
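For readers who have not built one before, a pass-through reverse proxy is only a few lines with Go's standard library. The sketch below is not the API Gateway itself (which the post later says is based on Kubernetes' Gateway API); it only illustrates the "forward everything to the existing router" starting point. The fake router and the `newPassthrough` and `demo` helpers are hypothetical.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"net/http/httputil"
	"net/url"
)

// newPassthrough returns a handler that forwards every request to upstream.
func newPassthrough(upstream string) (http.Handler, error) {
	target, err := url.Parse(upstream)
	if err != nil {
		return nil, err
	}
	return httputil.NewSingleHostReverseProxy(target), nil
}

// demo starts a fake "ESI router", puts the pass-through gateway in front of
// it, and returns what a client sees when calling the gateway.
func demo(path string) (string, error) {
	router := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintf(w, "router handled %s", r.URL.Path)
	}))
	defer router.Close()

	proxy, err := newPassthrough(router.URL)
	if err != nil {
		return "", err
	}
	gateway := httptest.NewServer(proxy)
	defer gateway.Close()

	resp, err := http.Get(gateway.URL + path)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	out, err := demo("/status/")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(out)
}
```

From the client's point of view nothing changes, which is what makes it safe to move functionality out of the router one piece at a time.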

It might seem like madness to add another layer of complexity to ESI, but one of our main requirements is that we want to be able to improve ESI without breaking ESI. This means that we want to leave existing ESI endpoints as intact as possible, so that the risk of regressions is as low as possible. Think of it as replacing the engine of a car that’s going 200 km/h (yes, that is above the speed limit)… We can’t just yoink out the engine and put in a new one. It requires finesse.

How do we get here

Today we kicked things off by making the DNS records for esi.evetech.net switchable between the new API Gateway and the ESI router. At a later date, we will use this switch to move traffic from the ESI router to the API Gateway. As the API Gateway simply routes all traffic back to the ESI router, we don’t expect any issues. But if they happen, we can use this switch to revert to the old situation. And with the magic of very small TTLs, the impact of switching in either direction should be minimal for third-party applications.

In order to check whether the API Gateway was ready, we had to formulate some criteria on what, to us, constitutes production-ready. The routing engine of the new API Gateway is based on Kubernetes' Gateway API, and is configured in a different way from the ESI router. This meant that we had to double-check traffic going into ESI to ensure that the API Gateway agreed with the existing routers on how traffic was routed. We confirmed this by taking hours’ worth of access logs from ESI and letting the API Gateway replay them.
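The replay check described above boils down to: feed every logged request to both routers and list the ones where they disagree. A minimal sketch of that comparison follows. The two toy routers, the backend names and the sample paths are invented for the example; the real comparison ran against actual access logs and the real routing configuration.

```go
package main

import (
	"fmt"
	"strings"
)

// Router maps a request path to the name of the backend that should serve it.
type Router func(path string) string

// Mismatch records a logged path on which the two routers disagree.
type Mismatch struct {
	Path     string
	Old, New string
}

// replay feeds every logged path to both routers and collects disagreements.
func replay(paths []string, oldRouter, newRouter Router) []Mismatch {
	var out []Mismatch
	for _, p := range paths {
		if o, n := oldRouter(p), newRouter(p); o != n {
			out = append(out, Mismatch{Path: p, Old: o, New: n})
		}
	}
	return out
}

// Two toy routers standing in for the ESI router and the API Gateway.
func oldRouter(p string) string {
	if strings.HasPrefix(p, "/status") {
		return "status"
	}
	return "default"
}

func newRouter(p string) string {
	if strings.HasPrefix(p, "/status/") { // stricter: requires the trailing slash
		return "status"
	}
	return "default"
}

func main() {
	logged := []string{"/status/", "/status", "/markets/prices/"}
	for _, m := range replay(logged, oldRouter, newRouter) {
		fmt.Printf("%s: old=%s new=%s\n", m.Path, m.Old, m.New)
	}
}
```

An empty mismatch list over a large enough log sample is the kind of evidence that lets you flip a switch with some confidence.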

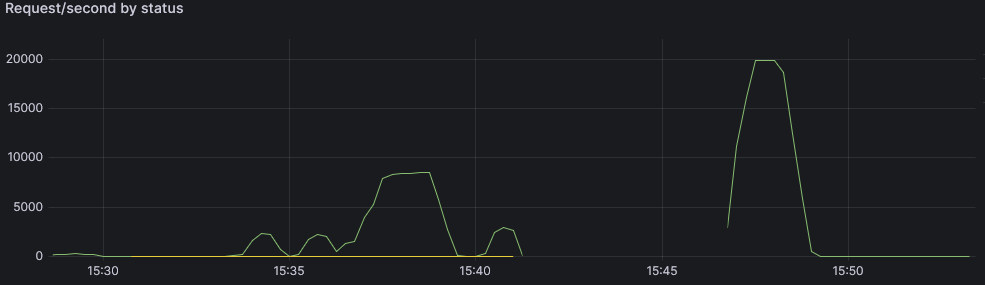

Knowing that traffic gets routed the correct way is one thing, but we also had to be certain that the amount of traffic wouldn’t be an issue for the API Gateway. We tested this partially while developing, making sure that newly introduced code didn’t reduce performance to unacceptable levels. We also tested this on our live cluster to ensure the hardware could hold up too. The results made us very happy: they show that a cluster half the size of what ESI runs on could handle twice the amount of requests/s, which leaves ample room for more features.

One of the other things we saw the opportunity to tackle while working on the API Gateway: zero-downtime deployments. Current deployments drop more than a few connections on the floor. This results in 500 errors for you, and the effects can last anywhere from a few seconds to a few minutes. So we delved into the wonderful world of Kubernetes to make sure that, once and for all, we can deploy without you noticing. And we succeeded (for the API Gateway, that is). The recipe for success? Three things:

Graceful shutdown: when we are asked to shut down, stop accepting new connections, but still handle all the current ones.

Delayed shutdown: when we are asked to shut down, wait for a bit for all Load Balancers and Services to understand we will be shutting down.

Rolling Upgrade in combination with Liveness and Readiness Probes: first start a new instance, wait for it to be started and accepting traffic, shift traffic to the new instance, and only then shut down the old instance. Repeat this till all instances are done.

It does mean deployments now take a bit longer (~5 minutes), but at least the service keeps on serving all requests at full speed without dropping a single one of them. This makes us much more comfortable deploying new versions, especially for a part as critical as the API Gateway. As a result, we can (and will) deploy smaller changes more frequently. Basically: we implemented CI/CD. Worth a celebration.
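On the application side, the graceful and delayed shutdown ingredients described above can be sketched roughly as follows. This is a minimal illustration with Go's standard library, not the API Gateway's actual code: the `App` type, the `/readyz` path and the delay values are assumptions, and the rolling upgrade itself is configured in Kubernetes rather than in application code.

```go
package main

import (
	"context"
	"fmt"
	"net"
	"net/http"
	"sync/atomic"
	"time"
)

// App bundles an HTTP server with the flag behind its readiness probe.
type App struct {
	srv   *http.Server
	ready atomic.Bool
}

func NewApp() *App {
	a := &App{}
	mux := http.NewServeMux()
	// Readiness probe: answers 503 once shutdown has been requested, so the
	// orchestrator stops sending new traffic to this instance.
	mux.HandleFunc("/readyz", func(w http.ResponseWriter, r *http.Request) {
		if !a.ready.Load() {
			w.WriteHeader(http.StatusServiceUnavailable)
			return
		}
		w.WriteHeader(http.StatusOK)
	})
	mux.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, "ok")
	})
	a.srv = &http.Server{Handler: mux}
	return a
}

// Ready reports what the readiness probe currently answers.
func (a *App) Ready() bool { return a.ready.Load() }

// Start listens on a free local port and serves in the background.
func (a *App) Start() (string, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return "", err
	}
	a.ready.Store(true)
	go a.srv.Serve(ln) // returns http.ErrServerClosed after Shutdown
	return ln.Addr().String(), nil
}

// Shutdown is what a SIGTERM handler would call.
func (a *App) Shutdown(delay, timeout time.Duration) error {
	a.ready.Store(false) // 1. fail the readiness probe
	time.Sleep(delay)    // 2. delayed shutdown: let load balancers catch up
	ctx, cancel := context.WithTimeout(context.Background(), timeout)
	defer cancel()
	return a.srv.Shutdown(ctx) // 3. graceful: no new connections, finish open ones
}

func main() {
	app := NewApp()
	addr, err := app.Start()
	if err != nil {
		fmt.Println("start failed:", err)
		return
	}
	fmt.Println("serving on", addr, "ready:", app.Ready())
	// In a real service this call sits behind a SIGTERM handler.
	err = app.Shutdown(100*time.Millisecond, 5*time.Second)
	fmt.Println("ready:", app.Ready(), "shutdown error:", err)
}
```

The order matters: the instance first announces that it is going away, then waits for the rest of the cluster to notice, and only then stops accepting connections while finishing the ones it already has.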

What’s next

As mentioned above, we will upgrade ESI to support both OpenAPI 3.0 and 3.1. Currently, ESI routes are generated by parsing Python docstrings that contain YAML describing the endpoint. This leads to some confusing experiences while working on ESI endpoints, as both the code and the documentation determine what happens at runtime. If the two disagree, the endpoint behaves inconsistently or fails outright.

We mean to address this by switching to a code-first way of defining endpoints, and using the Huma framework to assist with this. A comparison:

Current ESI:

```python
from datetime import datetime, timezone


def status_v2(request_context):
    """
    /status/:
      get:
        description: EVE Server status
        summary: Retrieve the uptime and player counts
        tags:
          - status
        responses:
          200:
            description: Server status
            examples:
              application/json:
                start_time: 2017-01-02T12:34:56Z
                players: 12345
                server_version: "1132976"
            schema:
              type: object
              required:
                - start_time
                - players
                - server_version
              properties:
                start_time:
                  type: string
                  format: date-time
                  description: Server start timestamp
                players:
                  type: integer
                  description: Current online player count
                server_version:
                  type: string
                  description: Running version as string
                vip:
                  type: boolean
                  description: If the server is in VIP mode
    """
    now = datetime.now(timezone.utc)  # stand-in for the real server start time
    return {
        "start_time": now,
        "players": 100,
        "server_version": "1.0.0",
        "vip": True,
    }
```

How it looks with Huma:

```go
var operation = huma.Operation{
	OperationID: "get_status",
	Method:      http.MethodGet,
	Path:        "/status/",
	Summary:     "Retrieve the uptime and player counts",
	Description: "EVE Server status",
	Tags:        []string{"status"},
}

type ServerStatusResponse struct {
	StartTime     time.Time `json:"start_time" example:"2017-01-02T12:34:56Z"`
	Players       int       `json:"players" example:"100"`
	ServerVersion string    `json:"server_version" example:"1132976"`
	IsVIPMode     bool      `json:"vip,omitempty"`
}

func ServerStatus(ctx context.Context, input *struct{}) (*ServerStatusResponse, error) {
	return &ServerStatusResponse{
		StartTime:     time.Now(),
		Players:       100,
		ServerVersion: "1.0.0",
		IsVIPMode:     true,
	}, nil
}
```

This new method is easier for developers to read, without requiring domain knowledge of OpenAPI/Swagger. It is also completely code-based, and because Go is a typed language, we get, for free, a compiler that tells us off when we are doing things wrong. Fewer bugs for you, easier development for us!
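To illustrate why code-first keeps code and documentation from drifting apart, here is a small sketch that derives a schema description directly from the struct definition using only Go's standard library. It is not how Huma works internally; the `Property` type and the `describe` and `openAPIType` helpers are hypothetical and cover only the handful of types in the example. In this sketch, a field tagged `omitempty` is treated as optional.

```go
package main

import (
	"fmt"
	"reflect"
	"strings"
	"time"
)

type ServerStatusResponse struct {
	StartTime     time.Time `json:"start_time"`
	Players       int       `json:"players"`
	ServerVersion string    `json:"server_version"`
	IsVIPMode     bool      `json:"vip,omitempty"`
}

// Property is a tiny, hypothetical subset of an OpenAPI schema property.
type Property struct {
	Name     string
	Type     string
	Required bool
}

// describe derives the properties from the struct itself, so the description
// cannot disagree with the type that is actually returned.
func describe(v any) []Property {
	t := reflect.TypeOf(v)
	var props []Property
	for i := 0; i < t.NumField(); i++ {
		f := t.Field(i)
		name, opts, _ := strings.Cut(f.Tag.Get("json"), ",")
		props = append(props, Property{
			Name:     name,
			Type:     openAPIType(f.Type),
			Required: !strings.Contains(opts, "omitempty"),
		})
	}
	return props
}

// openAPIType maps the few Go types used above to schema type names.
func openAPIType(t reflect.Type) string {
	switch {
	case t == reflect.TypeOf(time.Time{}):
		return "string(date-time)"
	case t.Kind() == reflect.Int:
		return "integer"
	case t.Kind() == reflect.Bool:
		return "boolean"
	case t.Kind() == reflect.String:
		return "string"
	}
	return "object"
}

func main() {
	for _, p := range describe(ServerStatusResponse{}) {
		fmt.Printf("%-15s %-18s required=%v\n", p.Name, p.Type, p.Required)
	}
}
```

Renaming a field or changing its type changes the generated description in the same commit, which is exactly the property the docstring-based approach lacks.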

We are looking forward to all these changes, and we hope you do too!

If you have any questions or comments on this blog post, you can find us in the usual place - #3rd-party-dev-and-esi on the EVE Online Discord.

That’s all Folks!

- CCP Pinky and CCP Stroopwafel

PS: Stroop is still planning ~~to take over the world~~ the best way to transport a 747 full of stroopwafels to Iceland.